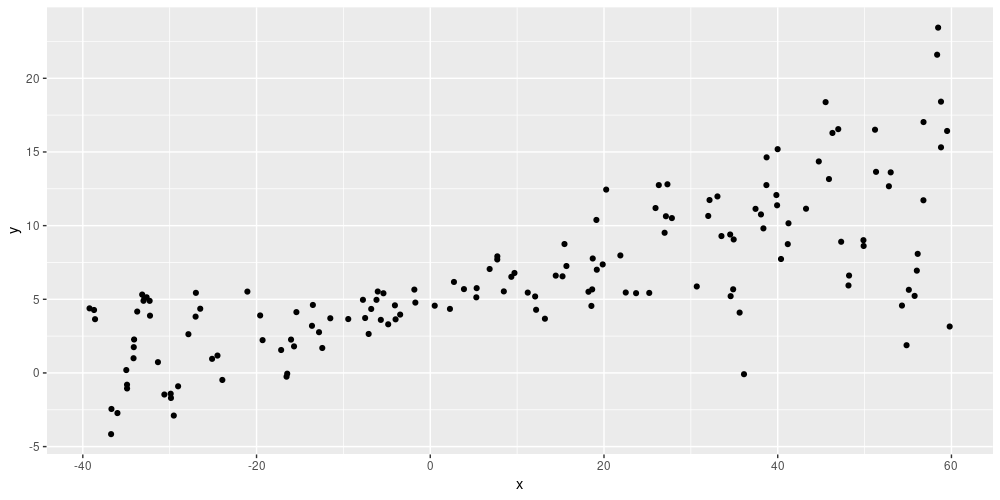

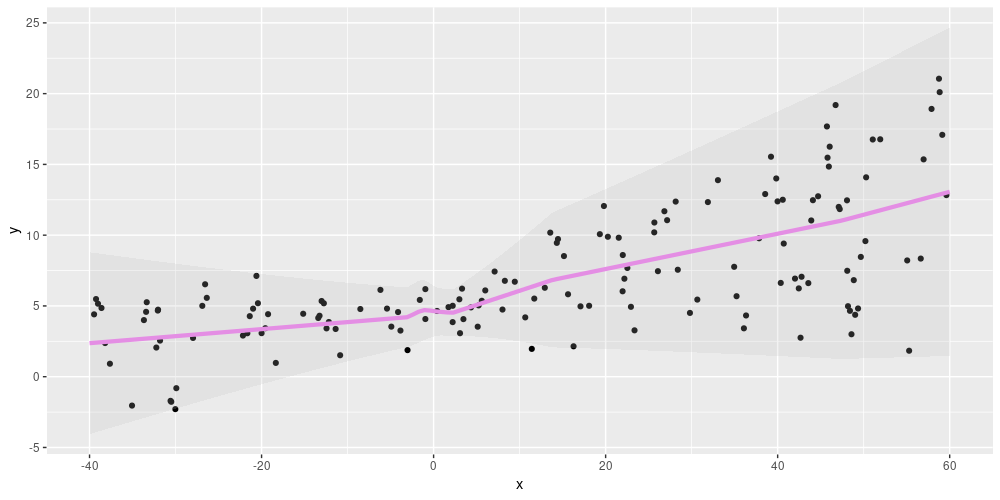

class: middle, center, inverse
background-image: url("images/PowerPoint-Backgrounds.jpg")
background-position: center
background-size: cover

# What's new in TensorFlow for R?

### Daniel Falbel

### RStudio

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# What's TensorFlow?

TensorFlow is an end-to-end open source platform for machine learning.

It's especially useful for Deep Learning models:

- Fast implementations of common operations (e.g. convolutions) on CPUs, GPUs and even TPUs.
- Automatic differentiation.
- Production ready: you can train and deploy models on multiple cloud services.

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# TensorFlow for R

The R bindings are spread across multiple packages:

- [`tensorflow`](https://github.com/rstudio/tensorflow): provides the `tf` object with access to the full TensorFlow API, plus installation helpers and other utilities.
- [`keras`](https://github.com/rstudio/keras): a wrapper of `tf.keras` with R-like syntax. This is the recommended way to use TensorFlow.
- [`tfdatasets`](https://github.com/rstudio/tfdatasets): a wrapper of `tf.data` for very efficient data input pipelines.

And others: [`tfhub`](https://github.com/rstudio/tfhub), [`tfprobability`](https://github.com/rstudio/tfprobability), [`tfds`](https://github.com/rstudio/tfds), [`tfautograph`](https://github.com/t-kalinowski/tfautograph) & [`autokeras`](https://github.com/r-tensorflow/autokeras)

---
class: center, middle
background-image: url("images/PowerPoint-Backgrounds3.jpg")
background-position: center
background-size: cover

# What's new?

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# tensorflow: Support for TensorFlow 2.0

TensorFlow 2.0 is a **big** change in the ecosystem.
The most important change is the introduction of **Eager Execution**:

.pull-left[
Before:

```r
# Build a graph.
a <- tf$constant(5)
b <- tf$constant(6)
c <- a * b

# Launch the graph in a session.
sess <- tf$Session()
sess$run(c)
##> [1] 30
```
]

.pull-right[
Now:

```r
a <- tf$constant(5)
b <- tf$constant(6)
c <- a * b
print(c)
##> tf.Tensor(30.0, shape=(), dtype=float32)
```
]

Other changes: API cleanup, `tf.function`, no more globals. See [here](https://www.tensorflow.org/guide/effective_tf2#a_brief_summary_of_major_changes) for more info.

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# tfautograph

[R package](https://github.com/t-kalinowski/tfautograph) by [Tomasz Kalinowski](https://github.com/t-kalinowski/). It lets you use tensors in R control flow expressions like `if`, `while` and `for`, and automatically translates those expressions into TensorFlow graphs.

.pull-left[
Before:

```r
x <- tf$constant(5)
tf$cond(
  pred = x > tf$constant(0),
  true_fn = function() tf$square(x),
  false_fn = function() x
)
##> tf.Tensor(25.0, shape=(), dtype=float32)
```
]

.pull-right[
Now:

```r
library(tfautograph)
x <- tf$constant(5)
autograph({
  if (x > 0) tf$square(x) else x
})
##> tf.Tensor(25.0, shape=(), dtype=float32)
```
]

And I'm not even showing how to write a `for` loop without `tfautograph`. Learn more [here](https://t-kalinowski.github.io/tfautograph/articles/tfautograph.html).

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# tfdatasets: Feature spec interface

A [recipes](https://github.com/tidymodels/recipes)-like interface for pre-processing tabular data for Deep Learning.

```r
spec <- feature_spec(hearts_train, target ~ .) %>%
  step_numeric_column(all_numeric(), normalizer_fn = scaler_standard()) %>%
  step_categorical_column_with_vocabulary_list(thal) %>%
  step_embedding_column(thal, dimension = 2)

spec <- fit(spec, hearts_train) # fit the spec to the dataset
```

Use it in a Keras model with the new `layer_dense_features`:

```r
model <- keras_model_sequential() %>%
  layer_dense_features(dense_features(spec)) %>%
  layer_dense(units = 32, activation = "relu") %>%
  layer_dense(units = 1, activation = "sigmoid")
```

Learn more [here](https://blogs.rstudio.com/tensorflow/posts/2019-07-09-feature-columns/) & [here](https://tensorflow.rstudio.com/guide/tfdatasets/feature_spec/)

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# tfdatasets: minor changes

We now support `purrr`-style lambda functions in `dataset_*` functions. In the example below, we read images and masks (for an image segmentation task) using tfdatasets.

```r
library(tensorflow)
library(tfdatasets)
library(tibble)
library(rsample)
library(purrr)

data <- tibble(
  img = list.files(here::here("data-raw/train"), full.names = TRUE),
  mask = list.files(here::here("data-raw/train_masks"), full.names = TRUE)
)
data <- initial_split(data, prop = 0.8)

training_dataset <- training(data) %>%
  tensor_slices_dataset() %>%
  dataset_map(~.x %>% list_modify(
    img = tf$image$decode_jpeg(tf$io$read_file(.x$img)),
    mask = tf$image$decode_gif(tf$io$read_file(.x$mask))
  ))
```

See a full example [here](https://blogs.rstudio.com/tensorflow/posts/2019-08-23-unet/)

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# tfdatasets: minor changes

You can also pass a `tf_dataset` directly to the `fit()` function in Keras; `make_iterator_one_shot()` is no longer needed.
```r
# create_dataset(): helper from the linked example that builds a tf_dataset
training_dataset <- create_dataset(training(data))
validation_dataset <- create_dataset(testing(data))

model %>% fit(
  training_dataset,
  epochs = 5,
  validation_data = validation_dataset
)
```

See example [here](https://github.com/r-tensorflow/unet/blob/master/vignettes/carvana.Rmd)

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# tfhub: R interface to TensorFlow Hub

Allows you to use pre-trained models available on [tfhub.dev](https://tfhub.dev/), including text, image and video models.

Using tfhub is as easy as using the `layer_hub` Keras layer:

```r
library(keras)
library(tfhub)

model_url <- "https://tfhub.dev/google/tf2-preview/mobilenet_v2/feature_vector/2"

input <- layer_input(shape = c(32, 32, 3))
output <- input %>%
  # we are using a pre-trained MobileNet model!
  layer_hub(handle = model_url) %>%
  layer_dense(units = 10, activation = "softmax")

model <- keras_model(input, output)
```

See full examples [here](https://tensorflow.rstudio.com/guide/tfhub/hub-with-keras/) & [here](https://tensorflow.rstudio.com/tutorials/beginners/basic-ml/tutorial_basic_text_classification_with_tfhub/)

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# tfhub and recipes

tfhub also includes [recipes](https://github.com/tidymodels/recipes) steps, so you can use pre-trained models in your tidymodels workflow:

```r
library(tfhub)
library(recipes)
library(parsnip)

model_url <- "https://tfhub.dev/google/tf2-preview/gnews-swivel-20dim-with-oov/1"

rec <- recipe(obscene ~ comment_text, data = train) %>%
  step_pretrained_text_embedding(
    comment_text,
    handle = model_url
  ) %>%
  step_bin2factor(obscene)

rec <- prep(rec)

# fit a logistic regression
logistic_fit <- logistic_reg() %>%
  fit(obscene ~ ., data = juice(rec))
```

See the full example [here](https://github.com/rstudio/tfhub/blob/master/vignettes/examples/recipes.R)

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# keras: Text preprocessing layer

Starting with TensorFlow 2.1, Keras provides built-in text preprocessing layers. They use only TensorFlow operations, which makes it easier to deploy such models to production.

```r
text_vectorization <- layer_text_vectorization(
  max_tokens = 50000,
  standardize = "lower_and_strip_punctuation",
  split = "whitespace",
  ngrams = NULL,
  output_mode = "int", # or "binary", "count" & "tfidf"
  output_sequence_length = 50,
  pad_to_max_tokens = TRUE
)

# Learns the vocabulary (the most frequent words) from the data
text_vectorization %>% adapt(df$text)
```

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# keras: Text preprocessing layer

Use it in your model:

```r
num_words <- 50000 # same value as max_tokens in layer_text_vectorization()

input <- layer_input(shape = c(1), dtype = "string")

output <- input %>%
  text_vectorization() %>%
  layer_embedding(input_dim = num_words + 1, output_dim = 16) %>%
  layer_global_average_pooling_1d() %>%
  layer_dense(units = 16, activation = "relu") %>%
  layer_dropout(0.5) %>%
  layer_dense(units = 1, activation = "sigmoid")

model <- keras_model(input, output)
```

See the full example [here](https://tensorflow.rstudio.com/tutorials/beginners/basic-ml/tutorial_basic_text_classification/)

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# tfprobability

TensorFlow Probability is a library for statistical computation and probabilistic modeling built on top of TensorFlow.

.pull-left[
```r
model <- keras_model_sequential() %>%
  layer_dense(units = 8, activation = "relu") %>%
  layer_dense(units = 2, activation = "linear") %>%
  layer_distribution_lambda(function(x) {
    tfd_normal(
      # use unit 1 of previous layer
      loc = x[, 1, drop = FALSE],
      # use unit 2 of previous layer
      scale = 1e-3 + tf$math$softplus(x[, 2, drop = FALSE])
    )
  })
```
]

.pull-right[
]

See the 0.8 release blog post [here](https://blogs.rstudio.com/tensorflow/posts/2019-11-07-tfp-cran/).
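
---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# tfprobability: training the model

Because the last layer outputs a whole distribution rather than a point estimate, a natural loss is the negative log-likelihood of the targets under that distribution. Below is a minimal sketch of training the model above, assuming simulated toy data (`x` and `y` are made up for illustration), an explicit `input_shape`, and an arbitrary choice of optimizer and number of epochs; it is not taken from the release post.

```r
library(keras)
library(tensorflow)
library(tfprobability)

# Hypothetical toy data: y is a noisy linear function of x
set.seed(42)
x <- matrix(runif(500, min = -1, max = 1), ncol = 1)
y <- 2 * x + rnorm(500, sd = 0.3)

# Same architecture as above, with an explicit input shape (an assumption)
model <- keras_model_sequential() %>%
  layer_dense(units = 8, activation = "relu", input_shape = 1) %>%
  layer_dense(units = 2, activation = "linear") %>%
  layer_distribution_lambda(function(params) {
    tfd_normal(
      loc = params[, 1, drop = FALSE],
      scale = 1e-3 + tf$math$softplus(params[, 2, drop = FALSE])
    )
  })

# Loss: negative log-likelihood of the observed y under the predicted normal
negloglik <- function(y, dist) -(dist %>% tfd_log_prob(y))

model %>% compile(optimizer = "adam", loss = negloglik)
model %>% fit(x, y, epochs = 20, batch_size = 32, verbose = 0)

# Calling the model returns a distribution, so we can query its mean and sd
pred <- model(tf$constant(x[1:5, , drop = FALSE]))
pred_mean <- as.array(tfd_mean(pred))
pred_sd <- as.array(tfd_stddev(pred))
```

Since the scale is predicted per input, the model estimates both an expected value and its spread for every observation.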
---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# autokeras

[R package](https://github.com/r-tensorflow/autokeras) by [Juan Cruz Rodriguez](https://github.com/jcrodriguez1989) that interfaces with AutoKeras, an open source software library for automated machine learning (AutoML).

```r
library(keras)
library(autokeras)

# Get the CIFAR-10 dataset; no preprocessing needed
cifar10 <- dataset_cifar10()
c(x_train, y_train) %<-% cifar10$train
c(x_test, y_test) %<-% cifar10$test

# Create an image classifier and try up to 10 different models
clf <- model_image_classifier(max_trials = 10) %>%
  fit(x_train, y_train)

# Evaluate it on the test set and make predictions
clf %>% evaluate(x_test, y_test)
clf %>% predict(x_test[1:10, , , ])
```

See the release blog post for [more info](https://blogs.rstudio.com/tensorflow/posts/2019-04-16-autokeras/).

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# tfds

It's very **experimental**. R interface to TensorFlow Datasets, allowing you to load many public datasets in the friendly tfdatasets format.

```r
imdb <- tfds_load(
  "imdb_reviews:1.0.0",
  split = list("train[:60%]", "train[-40%:]", "test"),
  as_supervised = TRUE
)

# imdb[[1]] is the first 60% of the training dataset
# imdb[[2]] is the last 40% of the training dataset
# imdb[[3]] is the test dataset
```

See more in the [tfds GitHub](https://github.com/rstudio/tfds) repository.

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# Model packages

We have packaged some commonly used models as R packages, both for ease of use and to make advanced model implementations available in R:

- [gpt2](https://github.com/r-tensorflow/gpt2) - R Interface to OpenAI's GPT-2 model

```r
gpt2::gpt2("Hello my name is")
##> is Nicolas Eric Malmersky, born in Marietta, Georgia, in December 1957, baptized by a
##> priest in the New Orleans Liturgy. He had never had a sense of the Mother and this was
##> a young brother ...
```

- [unet](https://github.com/r-tensorflow/unet) - Keras implementation of U-Net using R
- [densenet](https://github.com/dfalbel/densenet) - DenseNet for R

There are also community-contributed model implementations like:

- [RBERT](https://github.com/jonathanbratt/RBERT) - Implementation of BERT in R by [Jonathan Bratt](https://github.com/jonathanbratt) and [Jon Harmon](https://github.com/jonthegeek)

---
background-image: url("images/PowerPoint-Backgrounds4.jpg")
background-position: center
background-size: cover

# TensorFlow for R Blog

Many, many articles showing the state of the art of TensorFlow and Deep Learning, with detailed explanations.

.center[
]

---
class: center, middle, inverse
background-image: url("images/PowerPoint-Backgrounds.jpg")
background-position: center
background-size: cover

# Thank you!

[http://bit.ly/whats-new-in-tf](http://bit.ly/whats-new-in-tf)